Taking on the Boloverse: Does a sentient tank need a crystalline brain?

Last week, I asked Tom Kratman: are giant tanks outdated? This week, Tom and I talk about his giant tank novella Big Boys Don’t Cry. And I ask: does a sentient tank REALLY need a crystalline brain? And can you tell a brain from a computer by sight alone?

Tom and I are collaborating to turn his novella Big Boys Don’t Cry (BBDC) into a novel. We’ve been talking about the technology, in part because it’s a critique of the Bolo series of novels from the 1960s onwards. I remember thinking when I first read BBDC that, although the philosophical ideas were excellent, some of the technology felt old hat.

A brief rant about SF hardness

I have a bugbear about old tech in new stories. It’s probably because I first read BBDC in 2015 when it had a Hugo Award nomination. There’s a long-running problem of nostalgia, almost creative exhaustion, in award-winning sci-fi and fantasy.

As Kincaid put it, “the genre has become a set of tropes to be repeated and repeated until all meaning has been drained from them.”

This has changed a bit since Kincaid wrote his Widening Gyre review in 2012. Today, the same tropes will often be subverted with a splash of critical theory. For example, they might be deconstructed to show how they perpetuate oppression in society.

Either way, there’s now a noticeable disconnect between the Anglo-American sci-fi that’s winning major prizes and real-world science and technology. Strikingly few sci-fi stories deal with synthetic biology, for example.

This is in contrast with, say, cyberpunk. William Gibson, author of Neuromancer, got many of his new ideas by using new tech to predict cultural changes.

What’s this to do with Bolos?

When I read BBDC for the second time, I noticed a couple of what felt like technological anachronisms. So, when I started talking about the collaboration with Tom, one of my concerns was fixing them.

The most noticeable example is in Chapter 8 of BBDC:

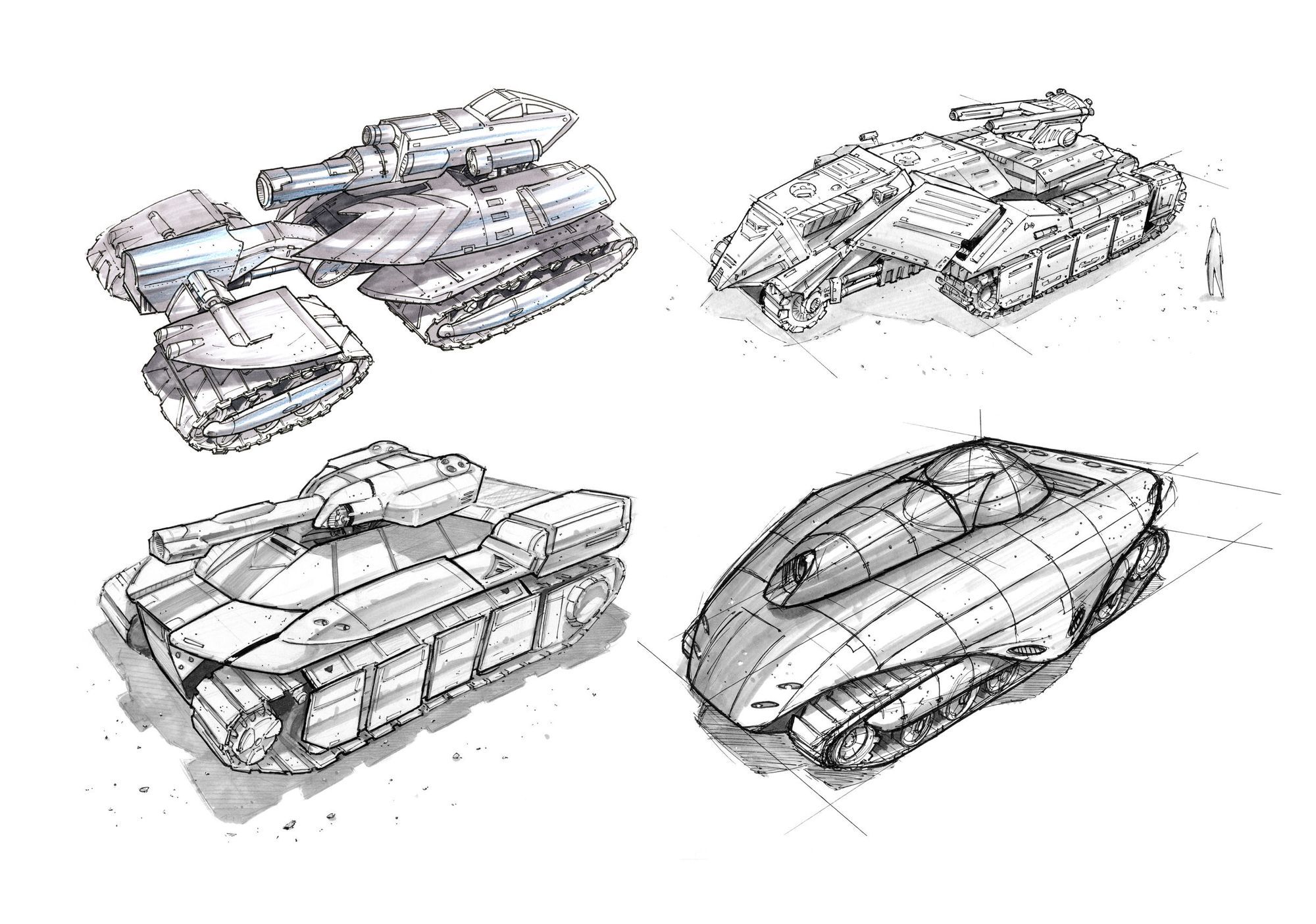

I had questions. First, I pictured the vehicle loading the crystalline brains as a cross between a Star Wars landspeeder and this Travis Perkins cargo truck.

Which raised the question: Why do you need a (human?) driver for an antigrav truck? Toyota demoed an automated forklift in 2018.

And then there were… the crystalline brains of the giant tanks.

The Trouble with Crystalline Brains

This technology for the Ratha AI was just baffling to me. I emailed Tom, who replied (I’ve edited our conversation): I seem to recall thinking, while writing BBDC, that the reader needs to see a solid physical object we call a brain in order to see the Ratha as sentient beings.

I wrote: I’m sure technology like ‘crystalline brains’ seemed fine in the 1970s when Laumer wrote the Bolo novels, but computing has come on a lot since then. There is already a wide range of well-known technologies, existing or proposed, for running an AI. Recreating Bolo-esque AI tech is like rewriting Asimov’s The Caves of Steel and retaining the expressways. When Asimov wrote the book in the 1950s, they were innovative and far-fetched, but today we have these fucking things IN AIRPORTS.

In my experience, people don’t associate sentience with a physical brain. Let’s try a thought experiment. You have a robot dog that chases balls for his elderly owner and curls up on the bed to watch TV when the old guy’s sick. When the old man dies, the robot dog licks his face persistently until his battery runs out… That’s going to read as sentient, whether or not the reader gets to open up the dog’s head case to find a brain.

Tom replied: In the first place, we’re stuck with them. But in the second place, you will recall that some brains fail? They’re grown like that to be random, not entirely logical, more people-like, hence more trainable and ferocious, once trained. Some are unsuitable, as one would expect from a random process. This is key; as sentient beings they are not just programmed; they are trained. Also, these things are going to take some external pounding, so the brains cannot be delicate. Solid, spherical, and crystalline tends to fit that.

A Brief Introduction to Today’s AI

The word that stuck out here (in fact, Tom bolded it) is TRAINING.

One of the biggest developments in artificial intelligence over the last thirty years is machine learning. Much of it relies on artificial neural networks, which are computer models originally inspired by the human brain.

These models consist of thousands or millions of artificial neurons. Each artificial neuron is a simple mathematical function: it receives multiple inputs, weights each one according to what the network has learned so far, adds them up and sends an output downstream.
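A minimal sketch of a single artificial neuron, assuming a sigmoid ‘squashing’ function (one common choice among several). The inputs, weights and bias are made-up numbers; in a real network, the weights are what training adjusts.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: weight each input, add them up, then squash the total."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 / (1 + math.exp(-total))  # sigmoid: output always between 0 and 1

# Hypothetical inputs and 'learned' weights.
output = neuron([1.0, 0.5, 0.0], [0.8, -0.4, 2.0], bias=-0.1)
print(round(output, 3))  # weighted total is 0.5, so this prints 0.622
```

Stack thousands of these, feed each one’s output into others, and you have a neural network.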

Neural networks are already used widely for specialist applications, such as medical image recognition. For example, a neural network might be fed millions of medical images, some showing cancerous tumours and some not. For each image, the network receives feedback on whether it correctly identified a cancer.

Eventually, the network will be able to identify images showing cancers with a high degree of accuracy. At this point, it will have ‘learned’ to find cancers. Now it can work on patient images, freeing up radiologists’ time for more complex cases.
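To make ‘feedback’ concrete, here is a hedged sketch of the oldest trick in the book: a perceptron (a single neuron with an on/off output) learning from labelled examples. The two numbers per ‘image’ are invented stand-ins for image features. A real tumour detector would be a far larger network trained on actual pixels.

```python
def predict(weights, bias, features):
    """1 means 'cancer', 0 means 'clear'."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0

def train_perceptron(examples, epochs=20, lr=0.1):
    weights, bias = [0.0] * len(examples[0][0]), 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(weights, bias, features)  # feedback: 0 if right, +1 or -1 if wrong
            # Nudge the weights towards the right answer (no change when it was right).
            weights = [w + lr * error * x for w, x in zip(weights, features)]
            bias += lr * error
    return weights, bias

# Hypothetical training set: (two made-up image scores, correct label).
EXAMPLES = [([0.9, 0.8], 1), ([0.8, 0.95], 1), ([0.1, 0.2], 0), ([0.2, 0.05], 0)]
weights, bias = train_perceptron(EXAMPLES)
print(predict(weights, bias, [0.85, 0.9]), predict(weights, bias, [0.15, 0.1]))
```

Nobody writes a rule like ‘cancer if the first score is above 0.5’; the weights drift into place purely from right/wrong feedback.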

Neural networks capable of learning to spot the difference between, say, tomatoes and cherries, can sometimes do so without a human telling them what features to look for.

I wrote to Tom: Magnolia (the Ratha protagonist of BBDC) is trained by being exposed to the entire human history of warfare. She learns to fight, or die, and continues to command/fight until she wins every battle. You were right on the mark about the Ratha training program having to have incentives/disincentives and Magnolia getting pleasurable feedback from killing things/achieving goals. Positive and negative feedback is how you train today’s neural networks.

THIS IS DEEP REINFORCEMENT LEARNING.
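Reward-driven training can be sketched in a few lines. This is a hedged toy, not how the Ratha (or any real military AI) would work: an ‘epsilon-greedy multi-armed bandit’, about the simplest form of reinforcement learning, with no neural network at all. The three tactics and their win rates are invented. The learner never sees the win rates, only the +1/-1 feedback after each simulated battle.

```python
import random

# Invented tactics and win rates. The learner never sees these numbers, only rewards.
TRUE_WIN_RATE = {"frontal assault": 0.3, "hold position": 0.5, "flank": 0.7}

def fight(tactic, rng):
    """One simulated battle: reward +1 for a win, -1 for a loss."""
    return 1 if rng.random() < TRUE_WIN_RATE[tactic] else -1

def train(battles=5000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    value = {t: 0.0 for t in TRUE_WIN_RATE}  # learned estimate of each tactic's average reward
    count = {t: 0 for t in TRUE_WIN_RATE}
    for _ in range(battles):
        if rng.random() < epsilon:
            tactic = rng.choice(list(value))    # explore: try something at random
        else:
            tactic = max(value, key=value.get)  # exploit: use the best tactic so far
        reward = fight(tactic, rng)
        count[tactic] += 1
        value[tactic] += (reward - value[tactic]) / count[tactic]  # running average of rewards
    return value, count

value, count = train()
print(max(value, key=value.get), count)
```

The small chance of exploring is what stops the learner from settling on the first tactic that happened to win once.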

Do AIs play Wargames?

Imagine a modern-day machine learning algorithm trained on, for example, Panzer Corps or Medieval Total War. You could subsequently task it with remotely controlling a swarm of small tanks, drones and other weapons.

You could also train the algorithm without a simulation. In this case, you’d give it thousands of real-world historical military decisions and tell it which ones led to victory, and which to defeat.
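A minimal sketch of learning from historical outcomes, with heavy assumptions. The records below are invented, and instead of a neural network it uses the crudest possible learner: a table of win rates for each (situation, decision) pair. A neural network would go further by generalising to situations that never appear in the records.

```python
from collections import defaultdict

# Invented records: (situation, decision, did it lead to victory?)
RECORDS = [
    ("outnumbered", "retreat", True),
    ("outnumbered", "attack", False),
    ("outnumbered", "attack", False),
    ("outnumbered", "retreat", True),
    ("outnumbered", "retreat", False),
    ("superior", "attack", True),
    ("superior", "attack", True),
    ("superior", "retreat", False),
]

def learn_win_rates(records):
    """Fraction of victories for every (situation, decision) seen in the records."""
    wins, totals = defaultdict(int), defaultdict(int)
    for situation, decision, won in records:
        totals[(situation, decision)] += 1
        wins[(situation, decision)] += won  # True counts as 1, False as 0
    return {key: wins[key] / totals[key] for key in totals}

def recommend(rates, situation):
    """Pick the decision with the best historical win rate in this situation."""
    options = {d: r for (s, d), r in rates.items() if s == situation}
    return max(options, key=options.get)

rates = learn_win_rates(RECORDS)
print(recommend(rates, "outnumbered"), recommend(rates, "superior"))
```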

Tom replied: I think I’ve mentioned to you that AI for wargames is laughably easy to defeat. They achieve what they do in games by what amounts to cheating. However, from using fairly cutting-edge military AI games, I am unconvinced that civilian games are any more stupid than they are. Even a fairly dumb human player will, most of the time, be a tougher fight than the AI. And I really doubt that will change as long as we use mere logic in something – war – that remains largely an exercise in emotion and character.

Did I ever tell you about the night I didn’t shoot someone?

Kuwait City, 1991. The Kuwaitis had set up phone banks so their gallant liberators could phone home for free to let their families know they were okay after the offensive. I was there with a friend of mine when a stay-behind (or a Palestinian) drove by and let loose a magazine.

I don’t think he was really trying because he didn’t hit anybody. However, suddenly, there were people from 27 or so different armies running to defend the perimeter. Many of them, notably the Arabs, were un-uniformed.

I told my friend: “Nah… let’s stay here. Then we can go to wherever there might be an attack.” This sounded good to him. The war had been, so far, a big disappointment for lack of action. So, we waited there, back-to-back, watching out.

From my side, I saw an Arab in a red robe with an AK moving towards the centre from shadow to shadow, there being no cover to speak of. To myself, I said, “Self, that fucker is too good. He can’t be one of ours.” And so, I drew a bead on him and was squeezing the trigger when… I don’t know what.

He did something I still can’t recognise that indicated he was a friend, not a foe. I let the pressure off the trigger and he joined us, guarding our backs. Let me know when an AI can respond to signals so subtle that the receiver doesn’t even know what they are.

The ‘Ghost’ inside the Chess Computer

I replied: Ah, okay. Our understanding of AI has changed dramatically over the last five years. In the 1990s, a chess computer was a bit of software running on a box that sat on your desk.

When you played chess against the computer, it ran a piece of software that some poor sod had programmed. The computer went line-by-line through a program something like:

IF Queen to D6, check all adjacent squares (well, not quite like that, but that’s the basic idea).

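Each time you made a move, the chess program would check every square to find the legal moves. It would then brute-force calculate sequences of possible moves a fixed number of turns ahead (no computer can calculate every possible move to the end of a chess game) and score the resulting positions using rules its programmers had written. The limit to the computer’s ability to play chess was the speed and power of the computer.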
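Chess is far too big for a short example, so here is a hedged sketch of the same brute-force idea on a toy game: a pile of stones, players take 1, 2 or 3 in turn, and whoever takes the last stone wins. The search is minimax in its compact ‘negamax’ form, cut off at a fixed depth, because even 1990s engines could only look a limited number of moves ahead. At the cut-off, a real engine would call a hand-written evaluation function; this sketch just returns 0 (‘don’t know’).

```python
MOVES = (1, 2, 3)  # a player may take 1, 2 or 3 stones

def negamax(stones, depth):
    """Score for the player about to move: +1 forced win, -1 forced loss,
    0 unknown because the search hit its depth limit."""
    if stones == 0:
        return -1  # the opponent just took the last stone and won
    if depth == 0:
        return 0   # horizon reached; a real engine would evaluate the position here
    # My best score is the move that leaves my opponent with the worst score.
    return max(-negamax(stones - take, depth - 1) for take in MOVES if take <= stones)

def best_move(stones, depth):
    """Try every legal move and keep the one that is worst for the opponent."""
    legal = [take for take in MOVES if take <= stones]
    return max(legal, key=lambda take: -negamax(stones - take, depth - 1))

print(best_move(5, depth=10))  # 1: leaves the opponent 4 stones, a lost position
print(negamax(8, depth=1), negamax(8, depth=10))  # 0 (too shallow to tell), then -1 (forced loss)
```

Give it more depth (that is, more computing power) and it sees further; give it less and it is blind. That is the 1990s limit described above.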

This isn’t how humans think. At some point in the last five years, there’s been a breakthrough in neural networks, which means the best game-playing AIs no longer work like 1990s chess computers. Many people don’t know about this, which is fair dos, but ignoring this breakthrough is a problem if you want to be taken seriously when writing about AI.

Within the next 5-10 years, we will have machine learning ‘algorithms’ that are indistinguishable from humans at specialised tasks. Machine learning models like AlphaZero, from Google-owned DeepMind, are already able to learn to play chess ‘like a human’ by playing millions of games against themselves. DeepMind’s AlphaStar AI, meanwhile, was rated above 99.8% of officially ranked StarCraft II players. OpenAI (co-founded by Elon Musk) built a Dota 2 team, OpenAI Five, that won 99.4% of its games against members of the public.

StarCraft II and Dota 2 are big online strategy and battle games.

One AI even found a new way to win an old Atari game.

None of these AIs had their strategies ‘programmed’ in the conventional sense of a line-by-line set of instructions. The coding went into the neural network itself, the rules of the game and the training set-up, not into the tactics. They learnt much as a human might learn: by encountering lots of different situations and finding out whether what they did worked.

How to Train a Giant Intelligent Tank

A neural network is a computer program that simulates individual neurons in the human brain (or, at least, is loosely inspired by them). If you could wire up billions of these artificial neurons in the same configuration as a human brain, you’d arguably be running a simulation of a human brain on a computer.
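A hedged, drastically scaled-down sketch of ‘neurons wired to neurons’: a tiny feed-forward network in plain Python. The layer sizes are arbitrary assumptions and the weights are random (untrained). A brain-scale model would need billions of neurons and far richer wiring than simple layers.

```python
import math
import random

def layer(inputs, weights, biases):
    """Each row of weights is one neuron; every neuron sees every input."""
    return [1 / (1 + math.exp(-(sum(w * x for w, x in zip(row, inputs)) + b)))
            for row, b in zip(weights, biases)]

def random_layer(n_in, n_out, rng):
    """An untrained layer: random weights, zero biases."""
    return [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)], [0.0] * n_out

def network(inputs, layers):
    for weights, biases in layers:
        inputs = layer(inputs, weights, biases)  # one layer's outputs are the next layer's inputs
    return inputs

rng = random.Random(42)
sizes = [4, 8, 8, 2]  # hypothetical: 4 sensor readings in, 2 outputs
layers = [random_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]
print(network([0.2, 0.9, 0.1, 0.5], layers))
```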

Now, let’s imagine that you got that complex neural network, or a whole series of neural networks, and you put them inside the body of a tank. You could link the network to the tank’s sensors. You could train the tank to successfully navigate obstacles, fight guerrillas, work out military strategies, and even develop an effective style of communication to talk to human soldiers.

Just like the training scenarios in BBDC, you might complete the initial training with your simulation of a brain inside a simulated tank in a simulation of a historical battle. You’d only connect the tank’s brain to its sensors in the second part of the training process.

The people who developed this neural network wouldn’t have any idea what was going on inside the box. The intelligent tank could develop unexpected, novel and innovative approaches to problem solving, because it wouldn’t be ‘programmed’ to do anything in particular.

Twins within the Digital World

In BBDC, Magnolia’s brain is trained using simulated data from historical battles. Training an AI using a simulation is a real, emerging technology. Many new drugs are made by living cells grown in vats called bioreactors. It’s a bit like how beer is brewed in vats of yeast. I’ve written several news stories about how (simple) neural networks are being trained to run these bioreactors. They are often trained on a virtual bioreactor: a simulation of a real vat. These ‘virtual’ bioreactors are called Digital Twins.
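Here is a hedged sketch of ‘training on the twin’. Everything in it is an assumption for illustration: the vat’s heating and heat-loss rates are invented, and the ‘training’ is a simple search over one controller setting (a proportional gain) rather than a neural network. The point is that every trial run, including the disastrous ones, happens in simulation rather than in a real vat.

```python
def simulate(gain, target=37.0, room=20.0, steps=200):
    """Hypothetical digital twin of a vat. Each step the controller sets heating
    (or cooling) power from a temperature reading that is one step out of date,
    while the vat loses heat to the room. Returns the total squared error."""
    temp = stale_reading = room  # the vat starts at room temperature
    total_error = 0.0
    for _ in range(steps):
        power = gain * (target - stale_reading)  # proportional control on old data
        stale_reading, temp = temp, temp + 0.1 * power - 0.05 * (temp - room)
        total_error += (target - temp) ** 2
    return total_error

# 'Training' on the twin: try each candidate gain in simulation, never on the real vat.
candidates = [0.5 * k for k in range(1, 25)]  # gains from 0.5 to 12.0
best_gain = min(candidates, key=simulate)
print(best_gain, simulate(best_gain))
```

Too low a gain never gets the vat warm enough; too high a gain, combined with the stale sensor reading, makes the temperature swing out of control. Finding that out on a twin costs nothing.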

Randomness and Crystalline Brains

Given the sheer number of possible inputs, the training process for a complex AI would produce pretty ‘random’ results. In fact, the finished neural network/AI might end up behaving like a sentient being, as though there was a ‘ghost inside the machine’. In particular, because the AI was responding to inputs and feedback, it wouldn’t necessarily behave in the way its designers expected. We’ve already seen this with ‘racist’ AIs.

The fact that the AI is a simulation of a human brain running on a computer, incidentally, is why the hardware doesn’t determine what the AI is. Faster hardware runs the simulation faster, but it’s the ‘architecture’ of the neural network (and what it has learned) that matters, not the architecture of the computer running the simulation. In principle, you could run the AI on a pocket calculator, or on a quantum computer in a Dyson sphere 2,000 years in the future. It’s still a simulation of a human brain.

Either way, you don’t need a crystalline brain to run a neural network. You could theoretically run Magnolia on present-day computers, which use silicon-chip technology. The hardware would need to be resilient against radiation, but we already build radiation-hardened electronics for space probes.

Do Androids Weep Over Electric Sheep?

He replied: Point of clarification. A computer is an idiot that counts on its fingers very quickly. Maggie has a crystalline brain.

A brain has emotions, probably in part because it is randomly grown. Emotion enslaves reason, generally, but also usefully, to give us poetry and prose. Hand-eye coordination may enable Michelangelo to carve David, but emotion and a touch of madness rule those hands to create high art.

If I’ve never recommended it before, I invite your attention to Jonathan Haidt’s The Righteous Mind [NOTE: I first encountered this book after it was recommended by Tom 🙂 ]. I will also claim, primly and properly, to have realised the same things as are in the book twenty or so years before it was published. Nice when someone does the footwork to prove one’s instincts and reasoning are correct.

And, once again, from a literary point of view, and this is especially true for the court martial, I think the reader needs to see a physical brain, different from ours, but also different from a million of my tabletop computer motherboards wired together, to identify with Maggie as a thinking, sentient person, and to see said person in gestation and ‘born’. Leaving aside that we’re stuck with it; if we were not stuck with it, we would still need to create a similar brain… for the reader.

How do you motivate a vacuum cleaner?

I wrote: Okay. Emotion and character are a function of motivations. Take my last dog, for example, who was obsessed with stealing dirty socks. He would expend much logical thought on devising plans for stealing them. He had non-human motivations, but entirely human logic.

A neural network, to repeat, is a simulation of the neurons in a human brain running inside a computer. It doesn’t intrinsically have motivation, but this is largely because current AI is simple.

However, we already have programs that act like very simple forms of life. Computer viruses, for example, are programmed deliberately, but behave similarly to ‘real’ viruses.

Roomba vacuum cleaners can:

- Take instructions via an app to clean particular rooms on a certain schedule;

- Move around the house cleaning, while avoiding obstacles and without repeating themselves;

- Look for a charging point and plug themselves in to recharge when they run out of battery.
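The three behaviours above can be mimicked by a handful of hand-written rules. This is a hypothetical sketch, not iRobot’s actual software, and the 15% battery threshold is an invented number. The point is how little code it takes to produce behaviour that looks purposeful.

```python
def choose_action(battery_pct, bumped, on_dock):
    """Pick the robot's next action from its current sensor readings."""
    if on_dock and battery_pct < 100:
        return "charge"
    if battery_pct < 15:
        return "seek dock"      # 'hungry': go and look for 'food'
    if bumped:
        return "turn away"      # avoid the obstacle it just hit
    return "clean forward"

for reading in [(80, False, False), (80, True, False), (10, False, False), (40, False, True)]:
    print(reading, "->", choose_action(*reading))
```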

If an ancient Roman visited an apartment with a Roomba, they’d have no way of knowing that it wasn’t ‘alive’ or ‘sentient’. At least, as much so as, say, a fly. After all, the Roomba has self-guided actions, sometimes makes mistakes and, when it gets hungry, it ‘hunts’ for ‘food’.

If you make the Roomba’s technology steadily more complex, you can imagine an entity that displays ‘desires’ more complex than ‘seek a power source’. At this point, how do you know that entity doesn’t have real passions?

How can our Roman distinguish, just by observation, between a Roomba looking for power and an animal looking for food?

Your friendly, personalised Roomba

You don’t need a crystalline brain to create randomness in neural networks. Every Roomba will have slight differences in how its sensors are set up during production. This is inevitable; you don’t need to introduce it deliberately. Two Roombas might navigate the same house differently. One might be more or less likely to collide with chairs, for example. This is a very basic form of ‘personality’.
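A hedged simulation of that idea. The safety margin, sensor noise and factory offsets are all invented numbers, and this is not how Roomba sensors actually work. Both units run identical code; the only difference is a small, fixed error in how one unit’s distance sensor was calibrated.

```python
import random

SAFETY_MARGIN_CM = 15  # identical software in every unit: turn if an obstacle looks closer than this

def approach(bias_cm, rng, start_cm=100, step_cm=5):
    """Drive straight at a chair. Returns True if the robot hits it before turning."""
    distance = start_cm
    while distance > 0:
        reading = distance + bias_cm + rng.gauss(0, 3)  # factory bias plus random sensor noise
        if reading < SAFETY_MARGIN_CM:
            return False  # turned away in time
        distance -= step_cm
    return True  # ran into the chair

def collisions(bias_cm, trials=1000, seed=1):
    rng = random.Random(seed)
    return sum(approach(bias_cm, rng) for _ in range(trials))

# Two hypothetical units with the same code but different factory sensor offsets.
print("unit A (bias 0 cm):", collisions(0))
print("unit B (bias +12 cm):", collisions(12))
```

Unit B thinks chairs are further away than they are, so it ‘bumps into things’ far more often: a quirk nobody programmed.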

It’s the same simple ‘personality’ you see in young children. Like Roomba, young children have different ‘sensor’ setups during ‘manufacturing’. We call this, in humans, nervous system development. My younger son seems to have a more reactive nervous system than my older one. In the womb, he was more likely to jump/twitch in response to the dog barking. As a four-month-old baby, he’s much less active and confident than my older son.

Octopus or Giant Tank?

Today, of course, we only have simple neural networks that can learn to play chess or recognise cancerous tumours. But I can imagine, in the future, you might chain neural networks together into a complex network. For example, a giant tank might be controlled by several neural networks. One may have learned to control guns. Another may have learned to navigate the tank over obstacles.

I could even imagine a Ratha having multiple neural networks spread all over its body, rather like octopi, which have large clusters of neurons in each arm that can control it semi-independently [NOTE: I know a lot about octopi because my older son watched the same 20-minute octopi documentary 15 times in one day]. It seems very likely that multiple neural networks, connected together, would develop ‘quirks’, ‘malfunctions’ or… ‘personality’, depending on who was on the receiving end.
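A hedged sketch of how such a ‘committee of specialists’ might be wired together. The two specialists are plain functions standing in for separately trained networks, and the names, thresholds and command format are all hypothetical. Each one only ever sees the sensor data and controls its own part of the tank.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Sensors:
    obstacle_ahead_m: float
    target_bearing_deg: Optional[float]  # None when no target is visible

def navigation_net(s):
    """Stand-in for a network trained only to drive over and around obstacles."""
    return {"steer": "left" if s.obstacle_ahead_m < 10 else "straight"}

def gunnery_net(s):
    """Stand-in for a network trained only to aim the guns."""
    if s.target_bearing_deg is None:
        return {"turret": "hold"}
    return {"turret": f"traverse to {s.target_bearing_deg:.0f} deg"}

SPECIALISTS = [navigation_net, gunnery_net]

def tank_step(sensors):
    """Ask each specialist for its part of the command and merge the answers."""
    command = {}
    for net in SPECIALISTS:
        command.update(net(sensors))
    return command

print(tank_step(Sensors(obstacle_ahead_m=5.0, target_bearing_deg=30.0)))
```

The interesting (and quirky) behaviour would come from the places where specialists disagree, which this simple merge ignores.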

What is the Measure of a Machine Learning?

All this raises the question as to how you’d distinguish a sufficiently-complex neural network from a conscious thinking machine. Would a sufficiently complex neural network BE a conscious thinking machine?

At the simplest level, a neural network that wins at chess looks ‘competitive’. A Roomba that looks for a power socket seems ‘hungry’. A tank running a neural network might display competing ‘desires’. For example, is it more important to look for fuel right now? Or should I shoot that bunch of guys hiding in a nearby barn? It’s also possible, due to differences in training and initial setup, for different neural networks to develop different responses to that question.
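A hedged sketch of two competing ‘desires’ being weighed. The urgency formulas and the two temperaments are invented for illustration; in a trained network, such weightings would be buried in the weights rather than written out by hand.

```python
def urgencies(fuel_fraction, enemies_visible):
    """How strongly each 'desire' is triggered by the current situation (0 to 1)."""
    return {
        "refuel": 1.0 - fuel_fraction,              # grows as the tank runs dry
        "attack": min(1.0, 0.3 * enemies_visible),  # grows with the number of targets in view
    }

def decide(fuel_fraction, enemies_visible, temperament):
    """Weight each desire by this tank's temperament and act on the strongest."""
    scores = {drive: temperament[drive] * u
              for drive, u in urgencies(fuel_fraction, enemies_visible).items()}
    return max(scores, key=scores.get)

# Two hypothetical tanks whose training left them with different weightings.
cautious = {"refuel": 1.5, "attack": 0.8}
aggressive = {"refuel": 0.7, "attack": 1.6}
print(decide(0.4, 2, cautious), decide(0.4, 2, aggressive))
```

Same situation, same code, different answers: one tank heads for fuel, the other for the barn.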

The Physical Architecture of a Thinking Machine

Tom replied: Magnolia is conscious, in part, due to the random nature of her brain. What the brain looks like inside is probably something like ours, but in a crystalline-metallic vein. I think I showed in the description that we were growing them around a neural network. The randomness in construction, as with human brains, is going to give them human-like tactical responses, once properly trained. Conversely, if you are just programming them via showing them skits and such, it can only be because they are intellectually insufficiently human.

How do you make AIs individuals?

I responded: I don’t understand the randomness in construction thing. Do you mean that you don’t want the Ratha to be clones of each other? Ultimately, Roomba are clones. Strong deviations from the basic design will lead to them being weeded out in the manufacturing phase.

Laumer’s Bolos had different personalities. Each Ratha in BBDC has a different personality. Your real concern seems to be how to create individual Ratha if they are manufactured on production lines like a Roomba. Will training the same neural network with the same data produce different results? It can: training usually starts from random initial settings and presents the data in a random order, so two copies can end up behaving differently. Trained algorithms can also differ for reasons that echo human differences, such as biased training data or poor image recognition.
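A hedged demonstration, using a single perceptron rather than anything Ratha-sized. The training data is invented. Two ‘units’ get exactly the same data and the same code; only the random seed differs, which changes their starting weights and the order in which they meet the examples.

```python
import random

# Hypothetical training data: (two made-up features, correct label).
EXAMPLES = [([0.9, 0.8], 1), ([0.8, 0.95], 1), ([0.1, 0.2], 0), ([0.2, 0.05], 0)]

def score(weights, bias, features):
    return sum(w * x for w, x in zip(weights, features)) + bias

def train_unit(seed, epochs=200, lr=0.5):
    """Train one perceptron on EXAMPLES; only the random seed differs between units."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1, 1) for _ in range(2)]  # random starting point
    bias = rng.uniform(-1, 1)
    order = list(EXAMPLES)
    for _ in range(epochs):
        rng.shuffle(order)  # each unit meets the same experiences in a different order
        for features, label in order:
            error = label - (1 if score(weights, bias, features) > 0 else 0)
            weights = [w + lr * error * x for w, x in zip(weights, features)]
            bias += lr * error
    return weights, bias

def accuracy(weights, bias):
    correct = sum((score(weights, bias, f) > 0) == (label == 1) for f, label in EXAMPLES)
    return correct / len(EXAMPLES)

unit_a, unit_b = train_unit(seed=1), train_unit(seed=2)
print("unit A:", unit_a, "accuracy", accuracy(*unit_a))
print("unit B:", unit_b, "accuracy", accuracy(*unit_b))
```

Both units get every training example right, but their internal weights differ, so they can disagree about cases they were never trained on.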

If you think about how humans end up different, it’s not due to the brain. It’s down to genetic recombination and the environment. When sperm and eggs are formed, each parent’s paired chromosomes randomly swap sections of DNA, so every sperm and egg carries a different mix of that parent’s genes. Fertilisation then combines one such mix from the father with one from the mother, creating a unique new person (see nifty cartoon video). The child’s destiny is shaped further by the conditions they encounter in the womb.

A neural network installed in a giant tank would need to learn to do even simple things – such as operating its guns. In humans, this process is called ‘childhood’.

Learning for Baby Tanks

As an example of this process in humans, let’s take this standard ginger baby (below). He has motivations to reach and manipulate objects in his immediate environment. Over several months, he’s been motivated to learn he:

- Has hands and feet;

- Can control his hands and feet;

- Can’t control every object by thought alone;

- Can use his hands to seize and manipulate objects that aren’t a body part;

- Can use his arms and legs to bring him closer to the object to manipulate.

He’s currently working on how to get closer to objects using a process called ‘rolling’. He will, hopefully, move onto crawling, walking and – later – running.

Google’s DeepMind division have already taught an AI to go through the learning process that this baby will hopefully complete over the next few months (below). You can imagine putting a complex AI through a similar training process, but with a side order of military tactics.

Optimising the Training Process

You could vary an AI’s ‘personality’ by changing the training process. Perhaps the training process could ‘self-learn from feedback’ to create random variations in the Ratha. Over time, the training would optimise them to fight.

Humans might discard or destroy suboptimal Ratha during training. Dog trainers, for example, ‘discard’ working dogs that ‘fail’ their training by rehoming them as pets.
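Random variation plus discarding the weakest is, in essence, an evolutionary algorithm. Here is a hedged, minimal sketch: each ‘genome’ is three numbers standing in for a candidate’s settings, and the scoring function is an invented stand-in for a simulated battle. A real programme would score candidates on something far richer.

```python
import random

def battle_score(genome):
    """Hypothetical stand-in for a simulated battle: higher is better.
    Here it just rewards genomes close to an invented 'ideal' profile."""
    ideal = [0.8, 0.2, 0.6]
    return -sum((g - i) ** 2 for g, i in zip(genome, ideal))

def evolve(pop_size=20, generations=50, seed=0):
    rng = random.Random(seed)
    population = [[rng.random() for _ in range(3)] for _ in range(pop_size)]
    first_best = max(map(battle_score, population))
    for _ in range(generations):
        population.sort(key=battle_score, reverse=True)
        survivors = population[: pop_size // 2]                 # 'discard' the weaker half
        children = [[g + rng.gauss(0, 0.05) for g in rng.choice(survivors)]
                    for _ in range(pop_size - len(survivors))]  # random variation on survivors
        population = survivors + children
    return first_best, max(map(battle_score, population))

first_best, final_best = evolve()
print(first_best, final_best)
```

Because the best survivors are always kept, the top score can never get worse from one generation to the next; the random variation is what lets it get better.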

I can also see a role for human involvement in the training process. Once you have a self-learning algorithm training self-learning robots, you have a recipe for ‘robots that take over the world’. Or, at least, a process that can go horribly wrong. The best-known thought experiment is the Paperclip Maximiser: a hypothetical AI that would destroy humanity by transforming planet Earth into paperclips.

Birthing a Thinking Machine

Tom wrote: Are you trying to wear me down? It’s not going to work. Going to be a big, spherical, silvery brain, built randomly, around a frame of what appears to be main neural connections. Can be quantum. And you can also arrange for it to assemble itself from random bits of whatever in a vat… around a bunch of thin rods emanating from the centre…

I replied: When you say ‘assembled itself’, do you want the crystalline brain to be a physical structure that replicates the architecture of a neural network? Magnolia the tank can’t have children and isn’t ‘born’ like a living creature. If she assembles her own brain, is she:

- A neural network who creates a physical copy of her own brain architecture? Or

- A neural network that runs on a few physical neurons. Later, it directs robots to build more physical neurons in a feedback loop?

If Magnolia made a physical copy of her own brain, she wouldn’t be cloning herself. You’d be creating a naive new network that had to relearn to interact with its environment (more like creating a child than a clone).
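The difference between copying the architecture and copying what has been learned can be sketched in a few lines. This is a hedged toy: the ‘architecture’ is just a list of layer sizes, and the variable names are hypothetical. What a network has learned lives entirely in its weights, so a copy of the architecture alone starts out naive.

```python
import copy
import random

def new_network(sizes, rng):
    """Architecture = the layer sizes; everything it has learned lives in the weights."""
    weights = [[[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
               for n_in, n_out in zip(sizes, sizes[1:])]
    return {"sizes": list(sizes), "weights": weights}

def run(net, inputs):
    for layer in net["weights"]:
        inputs = [max(0.0, sum(w * x for w, x in zip(row, inputs))) for row in layer]  # ReLU neurons
    return inputs

rng = random.Random(7)
magnolia = new_network([3, 5, 2], rng)       # pretend these weights came from years of training
clone = copy.deepcopy(magnolia)              # copies the architecture AND the learned weights
child = new_network(magnolia["sizes"], rng)  # copies the architecture only: fresh, untrained weights

x = [0.5, -0.2, 0.9]
print(run(magnolia, x), run(clone, x), run(child, x))
```

The clone responds exactly as Magnolia does; the child has the same shape of brain but none of her experience.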

Magnolia could build herself using self-assembling robots. Simple, experimental, self-organising robots are already a thing.

The question I have is:

What are the benefits of a neural network with physical architecture over one running inside a computer?

It could be, of course, that you want some sort of mechanical component to the Ratha’s brains. After all, silicon-chip computers are entirely digital, while the human brain is actually an analogue-digital hybrid. However, if you want the Ratha’s brains to be more ‘brain-like’, they could always print themselves biological brains using 3D bioprinting. I appreciate this may not be useful for extreme environments. However, the blurring of the line between biological and non-biological is a major future trend.

So, although there may be giant tanks in 300 years’ time, their brains probably won’t look like Laumer’s Bolo…