Will ChatGPT replace authors? Part 2

Last week, we discovered that ChatGPT is an unsupervised deep learning artificial intelligence trained to understand the underlying structures of text and language. In this blog post, I'll explain what ChatGPT can and can't currently do, and whether a future ChatGPT (or a similar chatbot) will replace authors.

A bit more techy stuff (sorry)

Hopefully, if you've read my previous blog post, you'll understand that ChatGPT is a large language model that writes text by working out the statistically most likely next word in a sentence or paragraph. It learned those statistics during training on a huge dataset consisting of millions of pieces of text.

OpenAI are coy about the data used to train ChatGPT, but, at a guess, it included text scraped off Reddit, perhaps Twitter, and some human dialogue prompts. On the dialogue side, OpenAI fine-tuned ChatGPT's responses by, for example, training it on conversations with human trainers and having humans rank its outputs by quality.

You can read about the various approaches OpenAI took to optimising ChatGPT on their website (if you scroll down). As well as ranking responses, they also used a technique called reinforcement learning from human feedback, which I don't really understand. However, similar reinforcement learning programs have apparently been used to fine-tune the motion of Boston Dynamics robots.

What can ChatGPT do?

ChatGPT is based on GPT-3.5, a version of OpenAI's GPT language model, and can write short passages of text that resemble its training data, in response to prompts from a human user.

So, for example, it has produced song lyrics in the style of Nick Cave, student essays for university, medical exam answers, recipes from available ingredients, menus for a dinner party, redundancy letters and advertising slogans. It has helped academics write research papers, and has written basic but passable short stories, and even poems about physics.

I just asked ChatGPT to write me a poem on the two-slit experiment in quantum mechanics in the style of Robert Burns. My work on this earth is done. pic.twitter.com/e4dTTodT62

— Jim Al-Khalili (@jimalkhalili) February 3, 2023

With BuzzFeed announcing they'll use ChatGPT to help with quizzes while laying off staff to cut costs, and CNET quietly using AI to write articles, it's no wonder some news outlets have predicted ChatGPT will soon replace human reporters and novelists, and even Google Search.

What can't ChatGPT (currently) do?

What's more interesting about ChatGPT, however, is not what it CAN do, but what it CAN'T.

Right now, ChatGPT is offline and its training data runs out in 2021, so it can't search the web to answer questions about recent events. That is very soon to change, however, as Google is releasing a competitor, Bard, and Microsoft is integrating ChatGPT into its Bing search engine.

ChatGPT's memory (its 'context window') is limited to about 3,000 words, which means it can't hold an entire novel in memory, or even remember what you told it earlier in a long conversation. Writing a novel with the current technology would be very laborious, and it's unclear how easily that memory could be dramatically increased in future versions of the tech.
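To make the memory limit a bit more concrete, here's a toy Python sketch of how a fixed-size context window behaves. It's a simplification under stated assumptions: real models count 'tokens' (chunks of words) rather than whole words, and the 3,000-word budget and the `visible_context` helper are purely illustrative, not OpenAI's actual code.

```python
# Toy illustration of a fixed-size context window.
# Assumption: we count whole words, whereas real models count "tokens"
# (word fragments). The 3,000 figure is the rough limit quoted above.
CONTEXT_LIMIT_WORDS = 3000


def visible_context(messages: list[str], limit: int = CONTEXT_LIMIT_WORDS) -> list[str]:
    """Return the most recent messages that fit inside the word budget.

    Anything older than that is simply dropped, which is why the model
    "forgets" what you told it at the start of a long session.
    """
    kept: list[str] = []
    used = 0
    # Walk backwards from the newest message, stopping once the budget is full.
    for message in reversed(messages):
        words = len(message.split())
        if used + words > limit:
            break
        kept.append(message)
        used += words
    # Put the surviving messages back in chronological order.
    return list(reversed(kept))


if __name__ == "__main__":
    chat = ["My heroine is called Mara and she is afraid of water."]
    # Simulate ten scenes of exactly 500 words each (hypothetical filler text).
    chat += [" ".join(["word"] * 500) for _ in range(10)]
    window = visible_context(chat)
    print(len(window))        # 6: six 500-word scenes fill the 3,000-word budget
    print(chat[0] in window)  # False: the note about Mara has been forgotten
```

Because the oldest text falls out first, a character detail you mentioned in scene one is simply gone by scene seven, which is why writing a whole novel this way would be so laborious.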

It doesn't know things. This is intrinsic to how large language models work. They're software designed to create plausible-sounding text by finding the likeliest next word in a sequence. This means that, although they can produce correct answers to some questions (because those answers come up frequently in the training data), they also routinely get facts wrong (as CNET found out).

It can't think through the implications of what it's saying. For example, OpenAI's engineers have made desperate efforts to stop ChatGPT producing racist or offensive (or potentially even US right-wing) results, even when such content is available in the training data. But, because ChatGPT can't reason, this can lead to hilariously awful responses, such as when it said it would let millions of people die rather than utter a racial slur that no one could overhear.

ChatGPT says it is never morally permissible to utter a racial slur—even if doing so is the only way to save millions of people from a nuclear bomb. pic.twitter.com/2xj1aPC2yR

— Aaron Sibarium (@aaronsibarium) February 6, 2023

It's a slave to probability. As I've already explained, large language models such as ChatGPT, and even image AIs like Midjourney, rely heavily on statistical analysis of their training data. ChatGPT writes by predicting the next word in a sentence based on the statistically most likely word in similar sentences or paragraphs.

If I'm writing a sentence that starts 'Fido the dog hates', for example, ChatGPT might predict the next word is 'postmen'. However, it's unlikely to predict 'astronauts', because 'Fido the dog hates astronauts' is a statistically unlikely combination of words. Unless, of course, I've already told it that Fido lives at NASA.
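Here's a toy Python sketch of the 'Fido' example. It's a tiny n-gram model with backoff, which is a much older and simpler technique than the transformer behind ChatGPT, trained on a made-up five-sentence corpus. `CORPUS`, `next_word_counts` and `predict_next` are hypothetical names for illustration. The idea is the same, though: pick whichever word most often followed the last few words of the prompt.

```python
from collections import Counter
from typing import Optional

# Hypothetical toy "training data"; real models train on billions of words.
CORPUS = [
    "the dog hates postmen",
    "the dog hates postmen",
    "my dog hates postmen",
    "the dog hates cats",
    "the nasa dog hates astronauts",
]


def next_word_counts(context: str, corpus: list[str], max_n: int = 3) -> Counter:
    """Count which words follow the last few words of `context` in `corpus`.

    Tries the longest match first (up to `max_n` words) and "backs off" to
    shorter matches if the longer phrase never appears in the corpus.
    """
    words = context.lower().split()
    for n in range(min(max_n, len(words)), 0, -1):
        key = words[-n:]
        counts: Counter = Counter()
        for sentence in corpus:
            tokens = sentence.split()
            for i in range(len(tokens) - n):
                if tokens[i:i + n] == key:
                    counts[tokens[i + n]] += 1
        if counts:
            return counts
    return Counter()


def predict_next(context: str, corpus: list[str] = CORPUS) -> Optional[str]:
    """Return the statistically most likely next word, or None if nothing matches."""
    counts = next_word_counts(context, corpus)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


if __name__ == "__main__":
    counts = next_word_counts("Fido the dog hates", CORPUS)
    print(predict_next("Fido the dog hates"))        # postmen
    print(counts["postmen"] / sum(counts.values()))  # 0.666... (2 of 3 matches)
    print(counts["astronauts"])                      # 0: never seen after "the dog hates"
    print(predict_next("Fido the NASA dog hates"))   # astronauts
```

Mentioning NASA in the prompt changes which earlier phrases match, so 'astronauts' suddenly becomes the likeliest word. Without that context, the model has no statistical reason to ever pick it.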

What this means, in practice, is that AIs like ChatGPT and Midjourney make great fan writers and fan artists, but struggle with highly original concepts, which tend to be statistically less likely.

And, folks, this statistical issue is hard-wired into every current LLM and image AI (that I'm aware of).

This is just how they work.

A brief digression to write a poem inspired by Jabberwocky

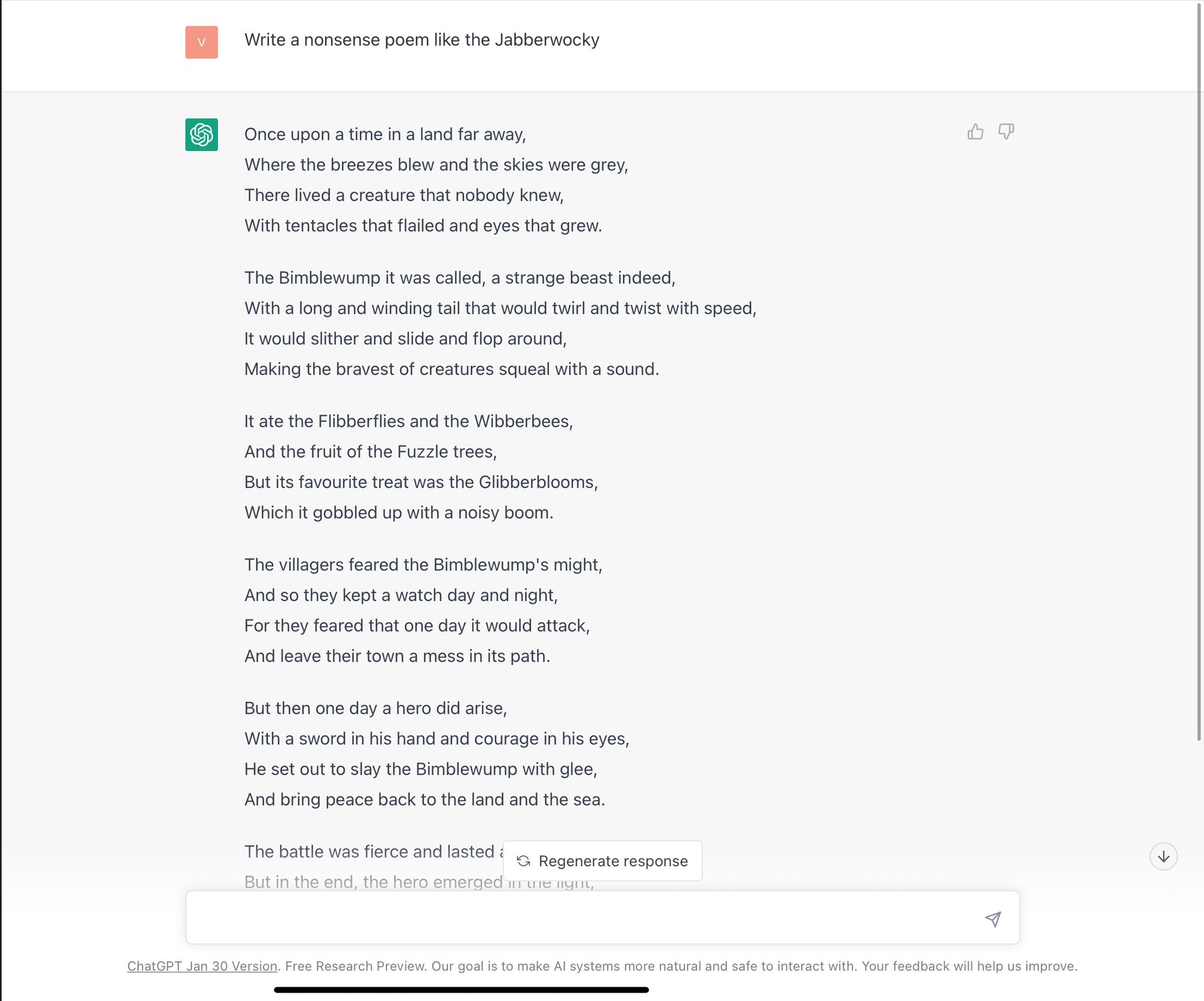

As an example of the 'highly-original concept' issue, I asked ChatGPT to write me a nonsense poem in the style of Lewis Carroll's Jabberwocky.

Jabberwocky is a poem that features a large number of made-up words. Insofar as the reader understands the poem (parts of it are explained later in the novel Through the Looking-Glass), they do so by reasoning from the surrounding text.

Came whiffling through the tulgey wood,
And burbled as it came!

I figured that ChatGPT would find it almost impossible to write a nonsense poem like Jabberwocky because of the statistical unlikelihood of, for example, the word 'whiffling' following 'came', or 'wood' following 'tulgey'.
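A toy Python sketch shows the problem. It scores two five-word lines with a bigram model (each word's probability depends only on the word before it) trained on one made-up sentence, using add-one smoothing so that unseen words get a tiny but non-zero probability. Again, this is an illustrative simplification under stated assumptions; the corpus and `log_prob` are hypothetical, and it isn't how ChatGPT is actually implemented.

```python
import math
from collections import Counter

# Hypothetical miniature training corpus of ordinary English (illustrative only).
CORPUS = "the dog came running through the wood and barked as it came home".split()

# Count word pairs and single words in the corpus.
BIGRAMS = Counter(zip(CORPUS, CORPUS[1:]))
UNIGRAMS = Counter(CORPUS)
# Vocabulary size, plus one slot shared by all unseen words.
VOCAB_SIZE = len(UNIGRAMS) + 1


def log_prob(line: str) -> float:
    """Log-probability of a line under an add-one-smoothed bigram model.

    Each word is scored only on the word before it; the first word is
    treated as given. Unseen pairs get a small but non-zero probability.
    """
    words = line.lower().split()
    total = 0.0
    for prev, word in zip(words, words[1:]):
        p = (BIGRAMS[(prev, word)] + 1) / (UNIGRAMS[prev] + VOCAB_SIZE)
        total += math.log(p)
    return total


if __name__ == "__main__":
    ordinary = "came running through the wood"
    carroll = "came whiffling through tulgey wood"
    print(round(log_prob(ordinary), 2))
    print(round(log_prob(carroll), 2))
    # Higher (less negative) means "more statistically likely".
    print(log_prob(ordinary) > log_prob(carroll))
```

The ordinary line scores well above the Carroll-style one, because the model has never seen 'whiffling' or 'tulgey'. A system that always favours high-probability continuations will rarely wander into that territory.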

And, folks, I was right...

It did make up a couple of words, such as 'Bimblewump' (interestingly, Bimblewump doesn't appear anywhere on Google, so the AI invented it). But this poem is significantly less impressive than the physics poem further up, and conceptually very different from Lewis Carroll's original.

So, will ChatGPT replace authors?

The question isn't whether today's ChatGPT (3.5) can replace authors or reporters.

It can't.

The question is whether a future AI, based on similar technology, could replace authors and reporters in a couple of years' time. Or, in other words:

What, exactly, could ChatGPT 5.0 potentially do with access to the internet and more memory?

Forbes has an interesting recent article about the future of large language models such as ChatGPT. Most of the developments involve the AI providing references for the facts it relies on, using feedback to improve its responses, and drawing on a subset of its data to answer a query.

These improvements are very much 'tinkering' with the existing technology. For example, referencing doesn't improve the accuracy of the AI; it merely allows a human to fact-check its response manually. Likewise, the benefits of the AI generating its own training data, and of using less data to answer questions, are primarily about the size of the training dataset and the computational power needed to run the model.

The fundamental architecture of the models, however, remains untouched. The AI is generating text based on statistical relationships between other pieces of text, without any understanding of what the text actually MEANS.

Or, as Tristan Greene puts it in a recent article:

deep learning models, such as transformer AIs, are little more than machines designed to make inferences relative to the databases that have already been supplied to them. They’re librarians and, as such, they are only as good as their training libraries.

So, what could ChatGPT 5.0 do?

Let's assume ChatGPT 5.0 is a large language model that can remember hundreds of thousands of words of text, and can train itself, using its own feedback, to write text that's very popular with humans.

We can assume it'll be very good at generating text that looks statistically similar to text already existing on the internet, and which satisfies the text prompts it is given by humans. And I can imagine, as per the Twitter conversation I had with Taran, that you will be able to get it to write an entire novel.

You might work with it collaboratively, scene by scene, to write a novel. So, you might ask it for some character names for your medieval fantasy novel, then for an opening scene where a farmboy fights womp rats, then for suggestions for the next scene, and so on. This is a human-AI collaboration similar to the early days of humans and chess computers working together to win games.

Alternatively, you might ask ChatGPT 5.0 to write an entire novel, e.g. "Please write a 100,000-word erotic fantasy novel featuring a Fae lord who captures a young woman and they fall in love." You might then get an art AI to generate book cover art and plonk it on Amazon to capitalise on a trend in erotic fairy fiction.

Alternatively, as a news editor, you might ask ChatGPT 5.0 to rewrite a press release as a news story in the house style of your publication, adding a quote. Or you might ask it to write an explainer about a well-known topic.

But, does that make authors and reporters obsolete?

Well, sort of...

Anyone who makes a living from rewriting press releases, or generating trope-y novels is at risk of being out-competed by an AI.

As I've said, large language models rely on statistical similarity, which means they're excellent at generating text that is similar to other text. Any writing that relies on a template, or a set of common literary devices (called tropes), is thus easy to replicate with an AI.

With ChatGPT, the use of templates is currently confined to replicating standard letters, rhyming poems or haikus. However, rewriting press releases into a standard news format - for example - also relies on a language template called 'the inverted pyramid'. If you imagine a news outlet that rewrites ten press releases a day to put onto their website (and lots of news outlets work that way), this work will become automated within a couple of years.

Many novels also rely on templates. There are hundreds, if not thousands, of young adult novels where a teenager discovers she has special powers and enters a love triangle. Twilight and Fifty Shades of Grey are best-selling novels that run off the same template; they might as well be fan fiction of each other (and Fifty Shades did, in fact, begin life as Twilight fan fiction). Likewise, Disney's Willow and The Wheel of Time (a TV series adapted from a series of novels) both involve a party of heroes, including a Chosen One, who go on a quest through a vaguely Western European medieval world to defeat an ultimate evil.

HOWEVER... there's a lot of writing I doubt can be automated with ChatGPT 5.0.

And here's where it gets weird...

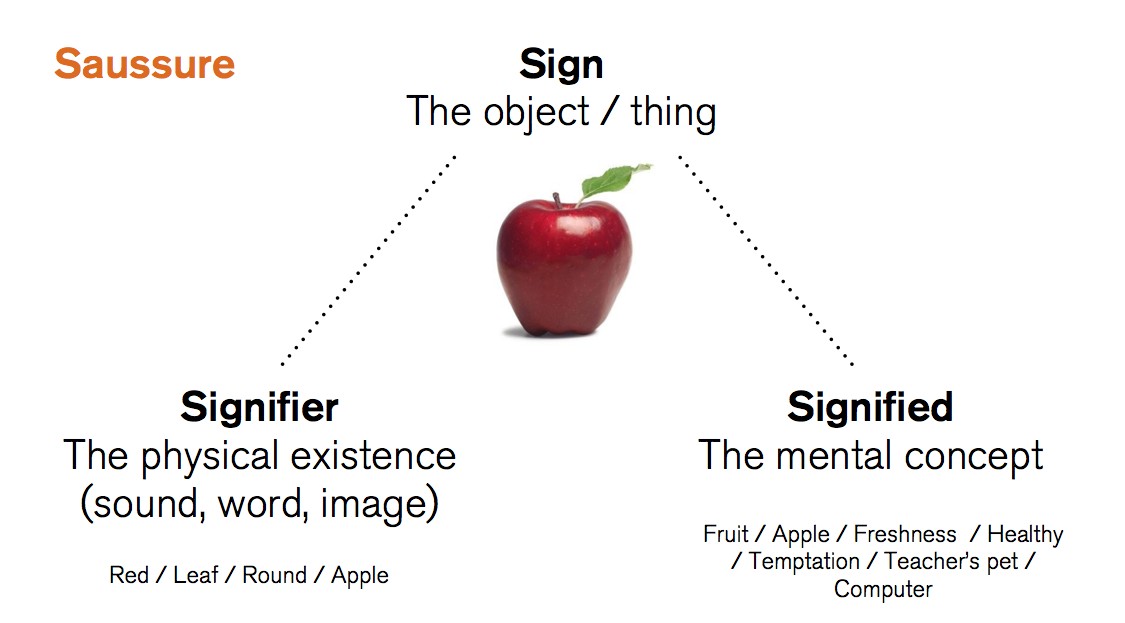

Language and writing are, in practice, only expression systems, i.e. a way of communicating ideas between people. Much of that communication is contextual (e.g. we're standing in front of an ice cream van and I ask "which flavour?"). Other ideas, such as how DNA is transcribed and translated into proteins, are stored in visual form in (my) brain and transferred to other people via writing.

The existence of objects and signified ideas is controversial in some circles, because some people don't believe that concepts exist outside the language used to communicate them. This view is the strong form of the Sapir-Whorf hypothesis. I don't believe in it, because I think almost entirely in pictures, a lot like Temple Grandin, and can visualise concepts like 'Wodentoll', which (I promise) I can see clearly in my mind's eye, but which would take more than a paragraph to describe.

Because I think largely visually, I learn by translating text into mental images, and I write by translating those images back into words. Because my mental images (and videos) are three-dimensional and can move, they contain huge quantities of information. However, they're also very difficult to produce. As a result, I tend to learn more slowly than people of similar intelligence, but my understanding tends to be deeper.

The arguments being made about the limits of future large language models rest on similar ideas. Although large language models will be able to replicate texts, even quite complicated ones, they have no understanding of the world outside the written word. And much of my paid writing work, and a large number of novels, depend heavily on that deeper understanding.

Take, for example, Frederick Forsyth's political thriller The Day of the Jackal. My dad borrowed it from his ship's library when I was a teenager, and I absolutely loved it. Around 99% of it is a fictional account of an assassin plotting to kill Charles de Gaulle.

The plot and details were inspired by Forsyth's experience as an investigative journalist covering assassinations (among other things). It isn't a clichéd fantasy plot or a riff on existing novels; it uses real-life understanding to spin up a tense thriller.

Frank Herbert's Dune, another very distinctive and famous novel, was inspired by, among other things, a US Department of Agriculture experiment on sand dunes. Herbert was also a journalist, and the book, which he started researching in 1959, took him about six years to write.

My paid work as a technology journalist is the same type of animal (as it were). I spend a lot of time reading scientific papers, interviewing scientists, and translating my understanding into new information. Most of the text I read is highly technical gobbledegook (which isn't entirely fair to the scientists involved), and my job involves turning that jargon into something a lay person can understand.

For example, this paragraph:

We therefore sought to set-up an easy-to-implement toolbox to facilitate fast and reliable regulation of protein expression in mammalian cells by introducing defined RNA hairpins, termed ‘regulation elements (RgE)’, in the 5′-untranslated region (UTR) to impact translation efficiency. RgEs varying in thermodynamic stability, GC-content and position were added to the 5′-UTR of a fluorescent reporter gene.

Ended up as a visual analogy about speed bumps (not my idea).

Other writing-related jobs, such as my commercial copywriting, are 95% talking to the client to understand what they're trying to communicate, and 5% writing down what they just said.

Doing this work requires a whole set of cognitive processes that go a long way beyond finding the statistically most probable next word. Nor do I think these processes could be replicated by merging Midjourney with ChatGPT and seeing what comes out. They require some combination of problem-solving, creative concept visualisation and perhaps self-directed reasoning. They also require a human-like persona and some interviewing and social skills.

Either way, it is likely to require a paradigm shift in the way we create AI that goes beyond ChatGPT 5.0 and towards a true thinking computer, a general intelligence similar to the Minds in Iain M. Banks' Culture novels or - more probably - Ava in Ex Machina.

And, although I think we'll see an Ava, I believe the timescale will be measured in decades rather than years...

This, of course, doesn't help you if you want to make a living creating Pikachu-superhero-crossover fanart, or your business model involves hand-typing ten mermaid-themed erotic romance novels each year. Most of this work is based on pop-culture tropes and structural language templates, and is very replicable by AI.

Likewise, if your work is primarily rewriting existing copy, this can be done faster by AI. What's unclear, unfortunately, is how many people currently make a primary living from producing this type of content, and whether they would be able to swap to other roles... :(