Will ChatGPT replace authors? Part 1

Much has been written recently about whether ChatGPT, a new artificial intelligence, will replace journalists, novelists and even written examinations. This concern is not entirely illegitimate. Pop media company Buzzfeed, for example, recently laid off 12% of its workforce while announcing a wider role for ChatGPT.

ChatGPT, a language-based computer program, has become extremely popular since the prototype was launched by OpenAI in November last year - with 100 million reported users within two months. Its popularity is due to its ability to respond to text prompts in a conversational style, including making creative suggestions.

In a previous blogpost, I experimented with using ChatGPT to write the opening to my dieselpunk novel (and it was a bit rubbish). However, when I talked to authors about AI, pointing out that ChatGPT has limited abilities right now didn't reassure them that they would still have a role in the future.

The reason is Moore's Law, the observation that the number of transistors on a computer chip (and, roughly, computing power) doubles every couple of years. As applied to AI, people point out that ChatGPT was writing everything from song lyrics to job applications within two months of launch. They assume that, as the technology matures, it will be able to write feature articles and even entire novels within a couple of years.

I write a lot about technology in my job. So, I figured I'd write a two-part blogpost about it. This blogpost (Part 1) will explain how AIs like ChatGPT work. A second blogpost (Part 2) will explain what they can (and can't) do, and the likely long-term impacts on authors.

What is ChatGPT?

ChatGPT is described as an artificial intelligence, which is technically accurate, but a little bit confusing to a science fiction fan. So, just to clarify upfront - ChatGPT is not an intelligent sentient being like GLaDOS, the villainous AI from the computer game Portal.

In the 'artificial intelligence' space, AIs that can think like humans are called strong AI or Artificial General Intelligence (AGI). At the current time, no one has made an AGI and experts think it will take decades - if not longer - before we know how to build one.

Machine learning and neural networks

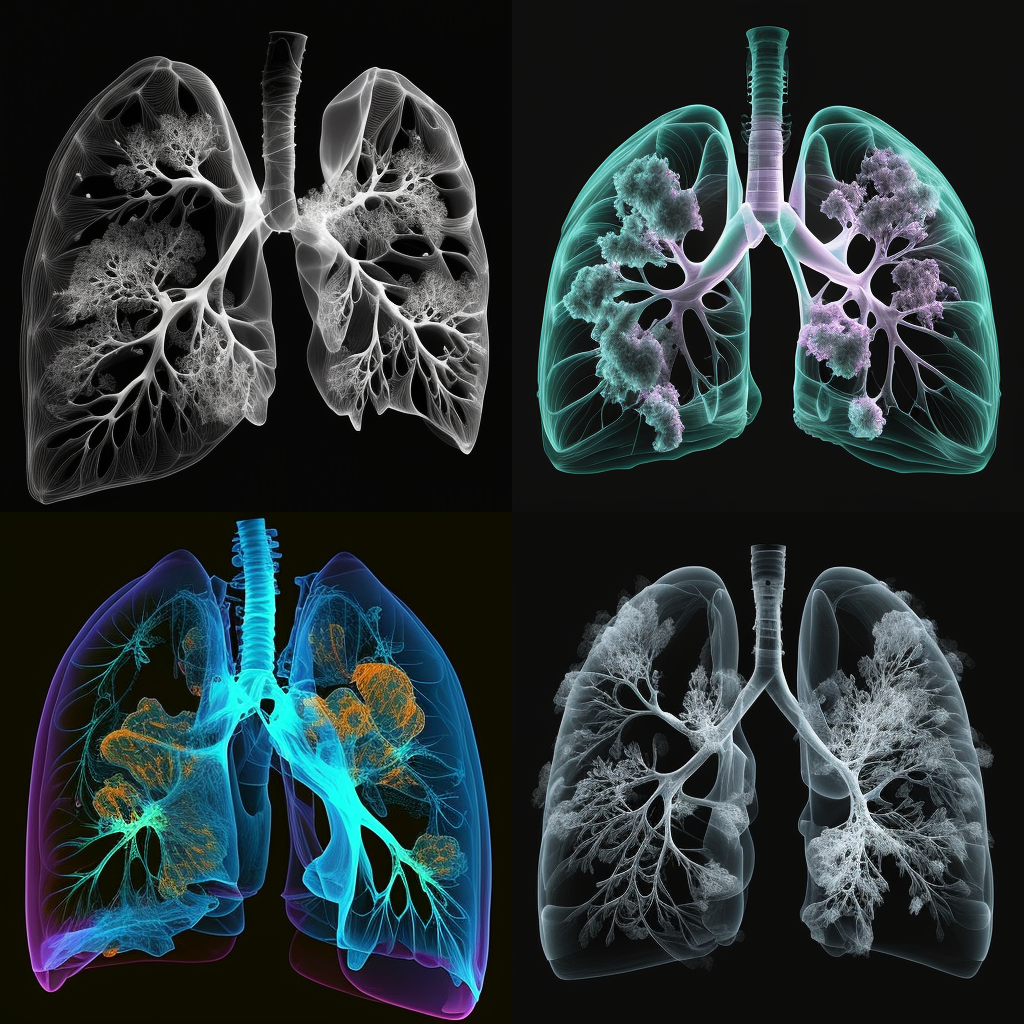

ChatGPT is what's called a large language model, which is built using a branch of machine learning. Like many machine learning tools, it's a computer program that uses large amounts of data to get good at a task - in this case, writing text. Machine learning is used everywhere these days, including for labelling Facebook photos and automatically detecting lung cancers from medical images.

Some machine learning tools, such as those for predicting whether someone will pay back a loan, rely on quite simple mathematical calculations. Increasingly, however, machine learning tools rely on what's called a neural network. Computer programs that rely on neural networks are called 'AIs' or, more properly, 'weak AI' (to distinguish them from GLaDOS).

As per the video, neural networks were originally inspired by research into how the human brain works. They consist of artificial neurons, each of which multiplies its input values by a set of weights, adds the results together and turns the total into an output number, which is passed on to other neurons in the network. The weights are adjusted during training, and the larger a weight is, the more influence that input has on whether the neuron activates.
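To make that less abstract, here is a minimal sketch of a single artificial neuron in Python. It assumes the common 'sigmoid' activation function, which squashes any total into a number between 0 and 1; real networks use several different activation functions, and the input, weight and bias values below are made up purely for illustration.

```python
import math

def sigmoid(x: float) -> float:
    """Squash any number into the range 0 to 1 (a common 'activation function')."""
    return 1.0 / (1.0 + math.exp(-x))

def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: multiply each input by its weight, add them up
    (plus a bias), then pass the total through the activation function."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(total)

# Hypothetical inputs and weights, chosen only for illustration.
# Weighted sum = 1.0 * 2.0 + 0.5 * -1.0 + 0.0 = 1.5, and sigmoid(1.5) is about 0.82.
print(neuron([1.0, 0.5], weights=[2.0, -1.0], bias=0.0))
```

Making the first weight bigger would push the output closer to 1, which is what 'more strongly weighted' means in practice.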

When the neural network is trained by being shown lots of examples of the same problem, the connections that help produce the right answer get more strongly weighted, so they are more likely to activate the neurons they feed into. Connections that lead to wrong answers, meanwhile, are weakened (you can play with a neural network here).
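Here's a toy sketch of that strengthening and weakening, assuming a single neuron learning the logical OR of two inputs from four made-up labelled examples. The update rule is plain gradient descent (the standard way of adjusting weights); real networks use an algorithm called backpropagation to apply the same idea across millions of neurons, so treat this as an illustration rather than how ChatGPT is actually trained.

```python
import math

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def predict(inputs, weights, bias):
    """Forward pass of a single neuron."""
    return sigmoid(sum(x * w for x, w in zip(inputs, weights)) + bias)

# Made-up labelled examples: learn the logical OR of two inputs.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

def train(data, epochs=2000, learning_rate=0.5):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in data:
            error = predict(inputs, weights, bias) - target
            # Gradient descent on the cross-entropy loss: each weight is nudged
            # against the error, so connections that pushed the output the
            # wrong way get weaker and helpful ones get stronger.
            for i, x in enumerate(inputs):
                weights[i] -= learning_rate * error * x
            bias -= learning_rate * error
    return weights, bias

weights, bias = train(DATA)
for inputs, target in DATA:
    print(inputs, "target:", target, "prediction:", round(predict(inputs, weights, bias), 2))
```

Nobody tells the neuron the rule for OR; it ends up with weights that produce the right answers simply by being corrected over and over.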

In short, a 'trained' neural network uses the weights inside its artificial neurons to recognise patterns in input data, and can go on to apply those patterns in new situations. In other words, it learns how to do things without being specifically programmed to do so.

Supervised and unsupervised learning

A neural network, for example, could be given millions of X-ray images, each labelled by a human to show whether it contains a lung cancer, and - by doing so - could learn to recognise a lung cancer on a new X-ray image. This is called 'supervised learning'. Some neural networks, such as the language model behind ChatGPT, can recognise patterns in text or images without being specifically told what to look for - this is called 'unsupervised learning'.

Unsupervised learning is much quicker to set up than supervised learning. With supervised learning, to help the AI understand what to look for, a human has to manually label every tumour on a huge number of training images. With unsupervised learning, the neural network trains itself on millions of unlabelled images - or text documents.
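With text, the trick is that the 'answers' come for free: every word in a document is the correct answer to the question 'what comes after the words before it?'. Researchers often call this 'self-supervised' learning. The sketch below shows the idea; `make_training_pairs` is a hypothetical helper, and splitting on spaces is a big simplification of how real models chop up text.

```python
def make_training_pairs(text: str, context_size: int = 3) -> list[tuple[tuple[str, ...], str]]:
    """Turn raw text into (context, next word) examples with no human labelling."""
    words = text.split()
    pairs = []
    for i in range(context_size, len(words)):
        context = tuple(words[i - context_size:i])
        pairs.append((context, words[i]))
    return pairs

for context, target in make_training_pairs("the cat sat on the mat"):
    print(" ".join(context), "->", target)
```

Unlike the X-ray example, no human had to label anything: one sentence produced three training examples on its own.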

Deep learning

The neural networks behind tools like ChatGPT are VERY complex. Rather than relying on one layer of neurons, they consist of many layers. Each layer analyses a different aspect of an image or text document, enabling the neural network to build complex models of reality. Because these networks have many layers, training them is called 'deep learning'.
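Stacking layers is simpler than it sounds: the output of one layer of neurons becomes the input of the next. The sketch below assumes sigmoid neurons and uses random, untrained weights with hypothetical layer sizes, so its output is meaningless; it only shows how data flows through a 'deep' network.

```python
import math
import random

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def layer(inputs, weights, biases):
    """One layer of neurons: each row of `weights` belongs to one neuron."""
    return [sigmoid(sum(x * w for x, w in zip(inputs, row)) + b)
            for row, b in zip(weights, biases)]

def random_layer(n_inputs, n_neurons, rng):
    """Untrained, randomly initialised weights (a real network learns these)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)] for _ in range(n_neurons)]
    return weights, [0.0] * n_neurons

def deep_network(inputs, layers):
    """Feed the data through each layer in turn: every layer's output becomes
    the next layer's input, so later layers work on patterns found by earlier ones."""
    activations = inputs
    for weights, biases in layers:
        activations = layer(activations, weights, biases)
    return activations

rng = random.Random(0)
# Hypothetical sizes: 3 inputs -> 4 neurons -> 2 neurons -> 1 output neuron.
LAYERS = [random_layer(3, 4, rng), random_layer(4, 2, rng), random_layer(2, 1, rng)]
print(deep_network([0.2, 0.7, 0.1], LAYERS))
```

Large language models work on the same principle, just with far more layers and billions of weights.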

Deep learning works a little like the way a toddler learns. The toddler points and says 'dog' and a parent says 'yes, that's a dog' or 'no, that's a cat', until the child learns the features that only dogs possess. For a deep-learning AI, these features might not be 'tail' or 'fur' - but, rather, some aspect of the colour or edge shape in dog images. The AI gets better at identifying dogs in response to feedback by changing the weights of individual neurons.

Researchers have been working on deep learning, in fits and starts, probably since about the 1960s. So ChatGPT and the image-generation AIs Midjourney and DALL-E aren't truly new - they're just the latest, and most experimental, applications of this technology.

But what's ChatGPT been trained to do?

ChatGPT is what's called a large language model (LLM), which is a deep learning algorithm (i.e. a mathematical computer program) that has been trained to generate text. So, at the very simplest, if we imagine giving it the text:

The cat sat on the...

You might expect it to fill in with 'mat'. Or, if you gave it the sentence:

The ... sat on the sofa.

It might provide 'cat', 'dog' or 'rabbit'.

At the very simplest, LLMs work out which word to provide next by estimating the statistically most likely word from their training data. So, put very simply, if 'the cat sat on the...' is followed by 'mat' 99% of the time, and by 'dog' 1% of the time, it will tend to fill in the blank with 'mat'.
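The counting idea can be sketched in a few lines of Python. This assumes a made-up corpus that mirrors the 99% / 1% example, and it simply counts which word follows each run of five words. Real LLMs don't store counts like this; they learn the probabilities with a neural network, but the output is the same kind of thing: a probability for each possible next word.

```python
from collections import Counter, defaultdict

def build_counts(corpus, context_size):
    """For every run of `context_size` words, count which word comes next."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for i in range(context_size, len(words)):
            context = tuple(words[i - context_size:i])
            counts[context][words[i]] += 1
    return counts

def next_word_probabilities(counts, context):
    """Turn raw counts into probabilities for the word after `context`."""
    followers = counts.get(tuple(context), Counter())
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()}

# A made-up corpus: 'mat' follows 99 times out of 100, 'dog' once.
CORPUS = ["the cat sat on the mat"] * 99 + ["the cat sat on the dog"]
counts = build_counts(CORPUS, context_size=5)
probs = next_word_probabilities(counts, ["the", "cat", "sat", "on", "the"])
print(probs)                        # {'mat': 0.99, 'dog': 0.01}
print(max(probs, key=probs.get))    # mat
```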

The earliest language models only did this, which meant they tended to lose context and write nonsense very quickly. This is because words only make sense within the context of the surrounding text. If we imagine we're writing about a dog called 'Adolf', for example, then 'Adolf hates...' is much more likely to be followed by 'postmen' than by any of the historical alternatives - but a model that only looks at the last word or two has no way of knowing that Adolf is a dog.
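You can see the problem in a sketch of the simplest possible model, one that only ever looks at the single previous word and always picks its most common follower. The two-sentence corpus is invented for illustration.

```python
from collections import Counter, defaultdict

def build_bigram_counts(corpus):
    """Count which word follows each individual word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def generate(counts, start, length):
    """Repeatedly append the most common follower of the last word only."""
    words = [start]
    for _ in range(length):
        followers = counts.get(words[-1])
        if not followers:
            break
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

CORPUS = ["the cat sat on the mat", "the dog chased the cat"]
print(generate(build_bigram_counts(CORPUS), "the", 8))
# the cat sat on the cat sat on the
```

Because the model forgets everything except the last word, it never reaches 'mat' and just goes round in circles.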

According to this Medium article, a team at Google Brain worked out how to solve this problem in 2017 with an approach called the transformer, which processes large chunks of text simultaneously. Subsequent LLMs weigh the statistical probability of any individual word within the context of the rest of the text. So, a newer LLM would realise that 'Adolf' is a 'dog' who is statistically likely to 'hate' 'postmen'.
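The 2017 work is usually identified with the paper 'Attention Is All You Need', and the 'T' in GPT stands for transformer. Its core ingredient is 'scaled dot-product attention': each word scores every other word for relevance, and those scores decide how much each word contributes to its understanding. The sketch below is heavily simplified and assumes tiny two-number 'meaning' vectors that I've invented for illustration; a real model learns much larger vectors from its training data.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    biggest = max(scores)
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is a weighted average of the value vectors.
    output = [sum(w * value[j] for w, value in zip(weights, values))
              for j in range(len(values[0]))]
    return weights, output

# Hypothetical vectors, invented for illustration only.
WORDS = ["Adolf", "is", "a", "dog"]
KEYS = [[1.0, 0.0], [0.0, 0.1], [0.0, 0.1], [1.0, 1.0]]
QUERY_FOR_HATES = [1.0, 1.0]

weights, output = attention(QUERY_FOR_HATES, KEYS, KEYS)
for word, weight in zip(WORDS, weights):
    print(f"{word}: {weight:.2f}")
```

With these made-up numbers, 'hates' pays most attention to 'dog' and then to 'Adolf', and very little to 'is' and 'a', which is roughly the kind of context that helps a newer LLM guess 'postmen'.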

Enter ChatGPT...

And, in the next blogpost, we'll discuss what ChatGPT can - and can't - do.

We'll also look into the future to decide if authors are obsolete.

I'll leave you with GLaDOS singing...